AI Governance Boards: Why They Are Now the Critical Control Layer for Copilots and Chatbots

Copilots and chatbots are now part of everyday work, and AI governance boards are the main control over how you use them. Many leaders want to adopt AI faster: 44% want a quicker pace, and only 16% are happy with how fast it is going.

| Statistic | Value |
|---|---|
| Satisfaction with AI adoption pace | 16% |
| Percentage wanting faster adoption | 44% |

Most organizations do not see all the risks of AI. Shadow AI projects, model drift, and hidden costs can derail your plans. More than half of organizations (55%) already have AI boards to manage these problems. You need strong rules to handle AI risks, follow laws, and keep your business safe.

- Shadow AI and rogue projects can break rules.
- Model drift can cause mistakes and bias.
- A lack of shared standards for working together slows things down.

Key Takeaways

- AI governance boards are very important for handling risks with AI systems like copilots and chatbots.
- Regular checks help find problems early. This makes sure the company follows laws and keeps the business safe.
- Having strong governance can help the company make more money. It can also make customers happier.
- Watching and improving AI systems all the time is key for safety and fairness.
- Making teams with different kinds of people helps bring new ideas. It also makes sure AI is used in the right way.

The Rapid Growth of AI Copilots and Chatbots

Enterprise Adoption Trends

You see AI copilots and chatbots everywhere now. Companies want to use AI to help people work faster and smarter. You can find AI copilots in email, documents, and even in meetings. Chatbots answer questions, help with customer service, and guide users through tasks. Many businesses add AI to their daily tools because they want to save time and reduce mistakes.

More teams now use AI for writing, searching, and making decisions. Some companies use AI to check data or spot problems before they grow. You might see AI copilots helping with reports or giving tips during work. Chatbots now handle more requests, so people can focus on harder jobs.

Note: When you use AI in many places, you need to watch for new problems. Fast growth means you must pay attention to how AI changes your work.

New Risks in AI Deployments

When you add AI copilots and chatbots, you face new risks. Some risks are easy to see, but others hide until they cause trouble. You need to know what can go wrong so you can protect your business.

- Adversarial machine learning attacks can trick AI into making bad choices.
- Data poisoning puts fake or harmful data into AI training, which can change how AI acts.
- Prompt injection lets someone change what large language models do by hiding instructions in the text the model reads.
- Supply chain attacks target the tools and code you use to build AI, which can lead to bigger problems.

Adversarial attacks can make AI misclassify important data. This mistake can cause big issues, like sharing private information by accident. Data poisoning can sneak into your AI models and make them act in ways you do not expect. Prompt injection can change how chatbots answer, sometimes giving wrong or unsafe advice. Supply chain attacks can break trust in your AI systems by changing the code before you even use it.

You must stay alert and set up strong rules for AI. If you do not, these risks can spread fast and hurt your business.

Why AI Governance Boards Matter

Oversight and Accountability

You need strong AI oversight for your business. AI governance boards are the last line of defense. These boards check every AI project before launch. They include people from legal, ethics, data science, compliance, and product teams. This group helps you find problems early and set clear rules.

- 80% of executives do not trust vendor AI claims without formal governance.
- 70% have seen at least one AI project fail because they did not have proper AI oversight.
- Companies with AI governance boards are twice as likely to see a return on investment within a year.

AI governance helps you follow important steps. Boards check if your AI uses the right frameworks. They make sure you run risk checks and keep decisions open. You must watch your AI models all the time to keep them safe and fair. AI oversight should start at the beginning of every project.

- Risk checks and open decisions build trust.
- Ongoing model checks keep you accountable.
- Governance should never be an afterthought.

Using conversational AI brings new risks. Boards help you handle these risks by setting rules and checking your AI. They make sure you do not miss hidden problems that could hurt your business.

Guardrails for Responsible AI

AI governance boards set up guardrails to keep AI safe and fair. These guardrails use controls like data loss prevention, audit logs, explainability, and red-teaming. Boards use these tools to stop data leaks, track decisions, and test AI for weak spots.

| Metric | Description |
|---|---|
| Data Classification Coverage | Shows how much of your content is labeled by sensitivity, owner, and purpose. |
| Mean Time to Detect and Respond | Measures how fast you find and fix governance problems. |
| Collect Audit Evidence | Involves saving logs and records to match with rules and laws. |
| Review Enforcement Effectiveness | Checks if your written rules match what happens in real life. |
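The first two metrics in the table can be computed from simple records. The sketch below is one possible way to do it, not a standard formula: the `Incident` record, the label names in `REQUIRED_LABELS`, and the function names are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed label set: the table says content is labeled by
# sensitivity, owner, and purpose.
REQUIRED_LABELS = ("sensitivity", "owner", "purpose")


@dataclass
class Incident:
    """One governance incident (hypothetical record shape)."""
    occurred: datetime
    detected: datetime
    resolved: datetime


def classification_coverage(items: list[dict]) -> float:
    """Share of content items that carry all required labels."""
    if not items:
        return 0.0
    labeled = sum(
        1 for item in items
        if all(item.get(label) for label in REQUIRED_LABELS)
    )
    return labeled / len(items)


def mean_time_to_detect(incidents: list[Incident]) -> timedelta:
    """Average gap between an incident happening and being noticed."""
    if not incidents:
        return timedelta(0)
    total = sum((i.detected - i.occurred for i in incidents), timedelta(0))
    return total / len(incidents)


def mean_time_to_respond(incidents: list[Incident]) -> timedelta:
    """Average gap between an incident being noticed and being fixed."""
    if not incidents:
        return timedelta(0)
    total = sum((i.resolved - i.detected for i in incidents), timedelta(0))
    return total / len(incidents)
```

A board could review these numbers at each meeting and ask why coverage dropped or why detection slowed, rather than waiting for an audit.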

Boards use explainability and red-teaming to test AI. They make reports that show what AI does, why it does it, and who fixes problems. They set release gates so AI cannot go live until it passes all checks. They watch for alerts and use key metrics like jailbreak rate and time to fix issues.
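A release gate can be as simple as a function that compares test results to limits the board has agreed on. The sketch below is a minimal illustration: the threshold values, the `GateThresholds` class, and the `evaluate_release_gate` function are hypothetical, and your board would set its own limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GateThresholds:
    """Limits a release must meet (all values are assumed examples)."""
    max_jailbreak_rate: float = 0.01  # share of red-team prompts that succeed
    max_open_high_risks: int = 0      # unresolved high-severity findings
    max_days_to_fix: float = 14.0     # average days to fix an issue


def evaluate_release_gate(
    red_team_attempts: int,
    red_team_successes: int,
    open_high_risks: int,
    mean_days_to_fix: float,
    thresholds: Optional[GateThresholds] = None,
) -> tuple[bool, list[str]]:
    """Return (passed, reasons). The release passes only with no reasons."""
    limits = thresholds or GateThresholds()
    failures: list[str] = []

    if red_team_attempts <= 0:
        # No evidence is treated as a failure, not as a pass.
        failures.append("no red-team results recorded")
    else:
        rate = red_team_successes / red_team_attempts
        if rate > limits.max_jailbreak_rate:
            failures.append(
                f"jailbreak rate {rate:.1%} exceeds {limits.max_jailbreak_rate:.1%}"
            )

    if open_high_risks > limits.max_open_high_risks:
        failures.append(f"{open_high_risks} high-severity findings still open")

    if mean_days_to_fix > limits.max_days_to_fix:
        failures.append(f"mean time to fix is {mean_days_to_fix} days")

    return (not failures, failures)
```

Returning the list of reasons, and not only a yes or no, gives the board something to put in its report when a release is blocked.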

| Evidence Type | Description |
|---|---|
| Executive Summary | Gives a quick look at top risks, business impact, and what needs fixing first. |
| Findings Catalog | Lists test cases, inputs, outputs, and model details with timestamps. |
| Risk Rating & Owner Map | Shows how serious each risk is and who must fix it. |
| Remediation Roadmap | Lays out quick fixes, medium-term steps, and long-term changes. |
| KPIs | Tracks things like jailbreak rate and time to fix problems. |
| Release Gates | Sets rules for passing tests before going live. |
| Monitoring & Alerts | Maps key signs to alerts for fast action. |
| Culture | Builds a team spirit that learns from mistakes instead of blaming people. |

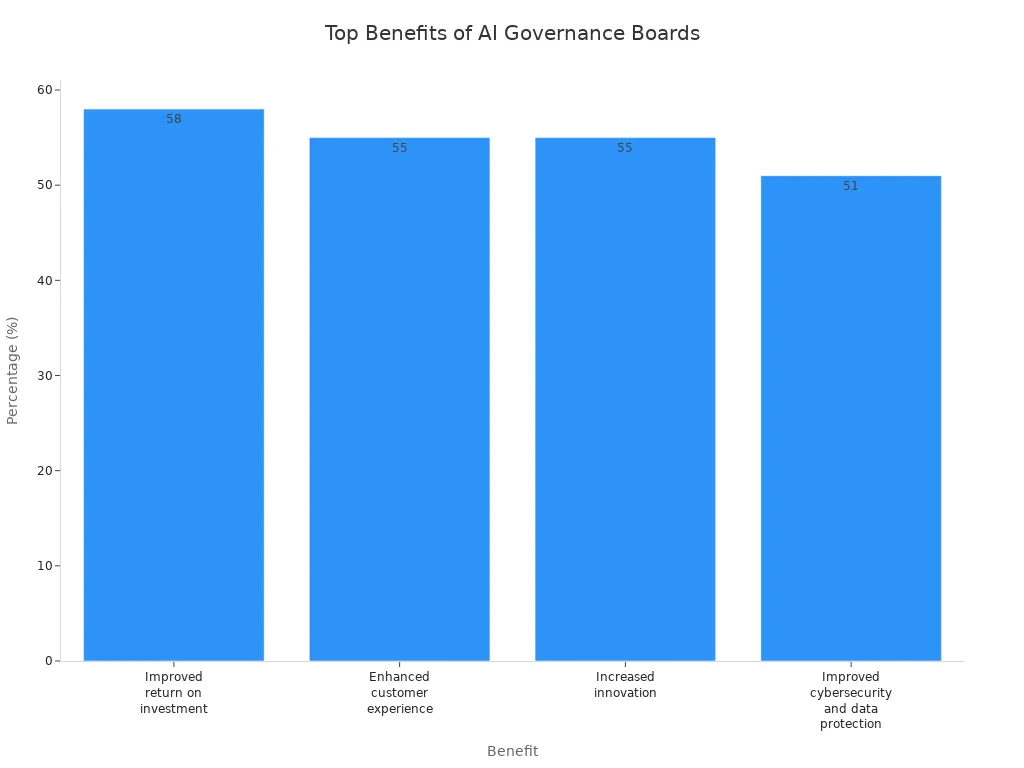

You get real benefits from AI governance boards. Surveys show that companies with strong AI oversight report these gains:

- Happier customers (55%)
- More new ideas (55%)
- Stronger cybersecurity and data protection (51%)
- Work gets done faster (46%)
- More value created (58%)

AI governance boards help you find AI risks before they grow. They use real-time data and AI-powered tools to look for problems. This helps you act fast and keep your business safe. You save time and collect better data, which helps your compliance team give better advice.

When you use conversational AI, you need these guardrails. Boards make sure your AI follows the rules, protects your data, and treats everyone fairly. They help you build trust with customers and keep your business strong.

Key Functions of AI Governance

Risk Management and Mitigation

You face new risks every time you use AI in your business. These risks can come from bias, data leaks, or even attacks that target your systems. AI oversight helps you spot these problems early. You need to build a strong AI strategy that covers every step, from planning to daily use.

Industry experts say you should focus on these main functions for AI governance:

- Set a clear AI strategy that matches your company’s goals.
- Build an ethics and governance plan to keep AI fair and open.
- Adjust your risk plans to include AI-specific issues like bias and data quality.
- Find new ways AI can help your business grow.
- Create a roadmap with clear goals and ways to measure success.
- Prepare your team with training and open talks about AI.
- Think about how AI affects the world and your community.

You need to use trusted frameworks to manage AI risks. Many companies base their AI oversight on COSO, ISO 31000, and NIST guidance. These frameworks help you handle security, data quality, and fairness. AI oversight also means you must work with cross-functional teams. You bring together experts from compliance, technical, and legal areas to review every AI project.

Here is a table of common risk mitigation strategies:

| Strategy | Description |
|---|---|
| Data governance | Checks for bias, keeps data safe, and follows privacy laws. |
| Explainability tools | Makes AI decisions clear so humans can check them. |
| Security teams | Protects your systems from attacks and other security challenges. |
| Cross-functional teams | Brings together different experts for better AI oversight. |
| Impact assessments | Looks at how AI affects people and checks for legal risks. |

Remember that AI oversight should help you use AI responsibly, not slow down your progress. Piloting new programs and finding champions inside your company can help you build trust in your AI strategy. When you use AI, always think about security and how to stop security problems before they grow.

Compliance and Regulatory Alignment

You need to follow strict rules when you use AI, especially in high-risk areas. AI oversight helps you meet these rules and avoid fines or legal trouble. Your AI strategy must include steps for compliance with laws like the EU AI Act, GDPR, and CCPA.

Here is how AI governance boards help you stay compliant:

| Evidence | Description |
|---|---|
| Structured Oversight | Boards set up clear rules and track everything to meet the EU AI Act by 2026. |
| Risk Management | You must check data quality, model accuracy, and bias all the time. |
| Vendor Compliance | You need contracts that make sure your vendors follow the same AI rules and allow audits. |

You should follow these steps to align with global data protection laws:

- Run AI risk checks to find problems with your data and models.
- Set up internal AI oversight teams and clear rules.
- Audit your AI systems often to check for bias and fairness.
- Make sure your AI follows privacy laws in every country where you work.
- Keep logs and records for all high-risk AI uses.
- Train your staff on AI ethics and legal rules.
- Work with legal experts to keep up with new AI laws.

AI oversight gives you the tools to show regulators that you take compliance seriously. You can use audit logs, explainability reports, and regular reviews to prove your AI strategy works. This builds trust with customers and keeps your business safe from legal risks.

Continuous Monitoring and Improvement

You cannot set up AI and forget about it. AI oversight means you must watch your systems all the time. You need to track how AI works, spot problems, and fix them fast. This is a key part of your AI strategy.

You should use these steps for continuous improvement:

- Track key metrics to see how well your AI works.
- Get feedback from users to find new problems or ways to improve.
- Run regular risk checks and model reviews to catch mistakes early.
- Use frameworks like the NIST AI Risk Management Framework to guide your monitoring and updates.

Here is a table showing the main stages for AI oversight:

| Workflow Stage | Description |
|---|---|
| Intake & Planning | Write down your goals, who is involved, and what could go wrong. |
| Development & Testing | Set data needs, test your models, and check if they meet your standards. |
| Review & Approval | Have experts from different teams check your work before launch. |
| Deployment | Roll out your AI slowly and watch for problems. |
| Monitoring & Maintenance | Keep checking your AI for drift and other issues. |
| Retirement | Plan how to safely shut down AI systems when you no longer need them. |
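For the Monitoring & Maintenance stage, one common way to check for drift is to compare how an input or score was distributed at launch with how it is distributed now. The sketch below uses the population stability index (PSI), which is a swapped-in technique and not something this article prescribes. The function name, the bin count, and the small floor value are assumptions.

```python
import math


def population_stability_index(
    baseline: list[float], current: list[float], bins: int = 10
) -> float:
    """Compare two samples of one numeric feature; 0.0 means no shift."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    low, high = min(baseline), max(baseline)
    if high == low:
        raise ValueError("baseline has no spread to bin")
    width = (high - low) / bins

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            # Values outside the baseline range go in the edge bins.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # A small floor avoids log(0) for empty bins (assumed value).
        return [max(count / len(values), 1e-6) for count in counts]

    base_shares = shares(baseline)
    curr_shares = shares(current)
    return sum(
        (c - b) * math.log(c / b) for b, c in zip(base_shares, curr_shares)
    )
```

A frequently quoted rule of thumb treats a PSI above about 0.25 as a large shift worth a model review, but the right alert level depends on your data and should be agreed by the board.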

You should also keep good records. Use model cards, data lineage logs, testing reports, decision logs, and incident reports. This helps you remember what happened and makes it easier to fix problems. AI oversight means you always look for ways to make your AI better and safer.

Tip: When you build your AI strategy, make sure you include regular reviews and updates. This keeps your AI safe, fair, and ready for new challenges.

You need to treat AI oversight as a living process. Your AI strategy should grow as your business and the world change. This helps you stay ahead of security challenges and keep your AI working for you.

Implementing AI Governance Boards

Overcoming Common Challenges

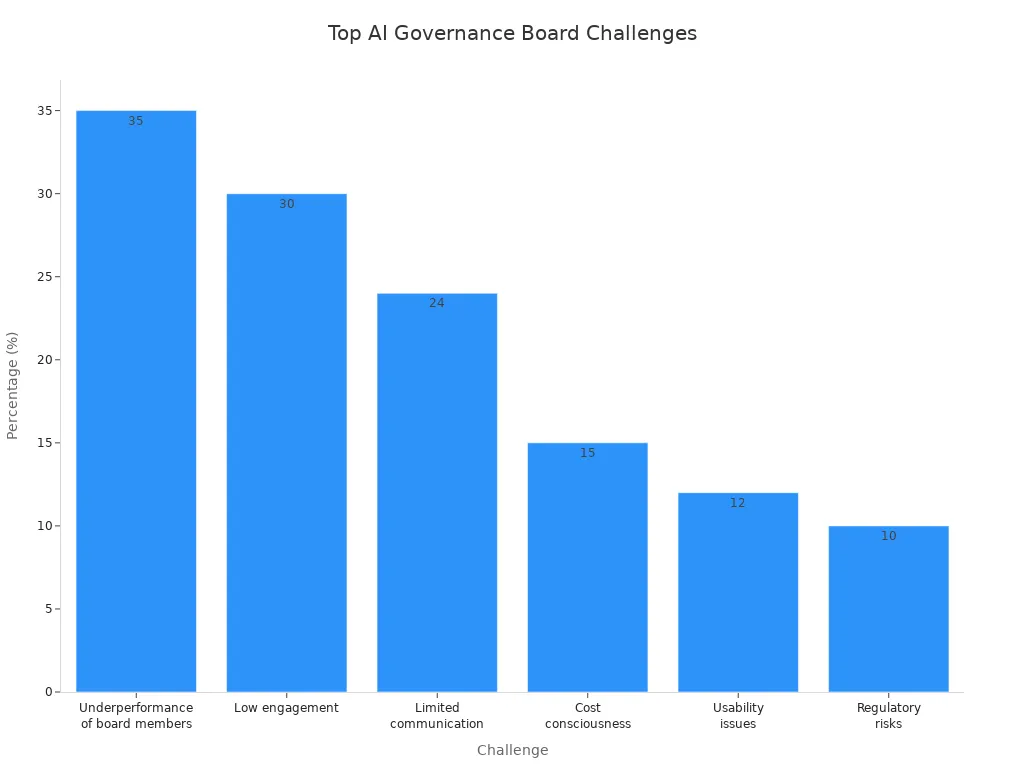

Setting up an AI governance board is not easy. Some board members underperform, and others do not take part enough. Sometimes people do not talk to each other. You might worry about spending too much money, run into usability problems, or face risks from rules and laws. Here is a table that shows the most common problems and how often they happen:

| Challenge | Percentage (%) |
|---|---|
| Underperformance of board members | 35 |
| Low engagement | 30 |
| Limited communication | 24 |
| Cost consciousness | 15 |
| Usability issues | 12 |
| Regulatory risks | 10 |

You also need to look out for bad data, bias, and security problems. Vendor risks and skills gaps can cause trouble too. When you use AI and chatbots, you must keep people’s trust. You need to protect your business from ethical and legal problems.

To get past these problems, you can:

- Use automation to find and sort things.
- Pick the biggest risks to fix first.
- Try smart automation for small changes.
- Use what you already have.
- Add AI steps slowly for better results.
- Help teams work together.
- Ask outside AI experts and citizen groups to review fairness.
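To pick the biggest risks first, many teams score each risk and sort the list. The sketch below uses a simple likelihood times impact score on a 1 to 5 scale. The scale, the `Risk` class, and the `prioritize` function are assumptions for illustration, and your board may prefer a different scoring method.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a risk register (hypothetical shape)."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    owner: str

    @property
    def score(self) -> int:
        """Simple likelihood x impact score; higher means fix sooner."""
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest score to lowest."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Vendor model changes without notice", 2, 4, "procurement"),
        Risk("Chatbot leaks customer data", 3, 5, "security"),
        Risk("Biased answers in HR copilot", 2, 3, "ethics"),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {risk.name}  (owner: {risk.owner})")
```

Keeping an owner on every entry ties the ranking back to accountability, so each high-scoring risk has a named person to fix it.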

Best Practices for Effective Governance

You need good habits to make your AI governance board work. Start with a simple checklist:

- Find the most important areas for AI governance.
- Decide what “ready” means for each area.
- Set up a basic governance layer early to check privacy and fairness.
- Keep a list of AI models and datasets.
- Write easy-to-follow rules for building and checking AI.
- Choose people to watch safety, privacy, and compliance.
- Make a chart that shows who does what for security.
- Plan for changes, like new versions or rolling back.
- Watch important numbers while AI runs, like speed and drift.
- Keep safe audit logs that do not show private info.
- Make a plan for what to do if something goes wrong.
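The checklist item about audit logs that do not show private info can be sketched as a small logging helper. This is only an illustration under stated assumptions: it redacts email addresses and nothing else, the `audit_entry` function and its fields are hypothetical, and the default `salt` is a placeholder you would replace with a managed secret. A hashed user ID is a pseudonym, not full anonymization.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Assumed pattern: catches common email shapes only. A real data loss
# prevention tool covers many more kinds of private data.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(text: str) -> str:
    """Replace email addresses with a fixed marker."""
    return EMAIL.sub("[EMAIL]", text)


def audit_entry(
    user_id: str,
    model: str,
    prompt: str,
    decision: str,
    salt: str = "replace-with-managed-secret",
) -> str:
    """Build one JSON audit line without raw user IDs or emails."""
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "user": digest[:16],  # pseudonym so reviewers can group by user
        "model": model,
        "prompt": redact(prompt),
        "decision": decision,
    }
    return json.dumps(record)
```

Writing one JSON object per line keeps the log easy to search later when the board or a regulator asks for evidence.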

Frameworks like the EU AI Act and the voluntary NIST AI Risk Management Framework help you organize your board. Here is how NIST explains the main jobs:

| Core Function | Description |
|---|---|
| Govern | Set rules, roles, and who decides for AI systems. |
| Map | Describe where and how each AI system is used, and list the risks that come with it. |
| Measure | Test and track those risks with clear metrics. |
| Manage | Rank the risks, act on them, and watch for risk changes. |

You must keep people in charge. Always ask for human approval on big choices. Never let AI work without someone checking it. People must be responsible for what happens. Smart systems help, but people still need to make the final call.

Competing systems make a lot of noise, so real-world judgment is still important. You need someone in control who knows when AI makes a mistake, ignores the noise, and makes the right choice when it matters.

If you follow these steps, people will trust your AI and chatbots. Your business will be safe and ready for new changes.

You need an AI governance board to keep copilots and chatbots safe. Studies show the biggest risks come from data and society. You must protect data and lower bias. You also need to share responsibility. Companies now use AI governance as a main part of their plans. They mix new ideas with strong rules to build trust.

| Key Insight | Description |
|---|---|
| Risks of AI | The biggest risks are complex and come from data and society. |
| Governance Complexity | Real AI governance means protecting data, lowering bias, and sharing responsibility. |
| Ethical AI | Businesses must make sure AI is fair, clear, and follows rules. |
| Internal Governance | Groups set up AI governance boards to watch over fairness and lower risks. |
| Strategic Capability | AI governance is now a key skill, not just a process. |
| Innovation and Governance | Groups mix governance with new ideas to make AI fair and responsible. |

You can make your AI governance board stronger by doing these things:

- Make AI oversight a regular part of board meetings.
- Build teams with people who have different skills.
- Train board members with help from experts.
- Use local and regional rules to guide your board.
- Support new ideas and local projects.

Tip: Put governance first when you add more AI to your business. Strong oversight helps you avoid mistakes and build trust.

FAQ

What is the main job of AI governance boards?

You use AI governance boards to check every AI project. These boards review risks, set rules, and make sure your AI works safely. They help you follow laws and keep your business safe.

How do boards help with AI risks?

Boards look for problems like bias, data leaks, and attacks. They use tools to test AI and watch for mistakes. You get alerts when something goes wrong. Boards help you fix issues fast.

Why do you need so many boards for AI projects?

You need many boards because each board checks a different part of your AI. Some boards focus on data, others on fairness, and others on security. This way, you cover all risks and keep your AI safe.

How do boards make sure AI follows the rules?

Boards use checklists and logs to track every step. They check if your AI meets laws like the EU AI Act. Boards keep records and review your AI often. You can show proof if someone asks.

Can boards help you improve your AI over time?

Yes, boards help you learn from mistakes. They collect feedback and suggest changes. You can use their advice to make your AI better and safer. Boards support you as your AI grows.